GPU identifiers


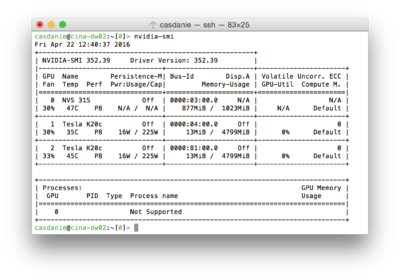

Figure: A screenshot of nvidia-smi showing three devices.


Latest revision as of 09:17, 25 October 2017

The GPUs accessible by your system in an interactive session (i.e., assuming you can directly ssh onto the node where the GPUs are installed) can be shown with nvidia-smi. The system assigns an integer number to each device CUDA can talk to.

Remember that some GPUs are dual devices. A single NVIDIA K80 will show up as two different devices, each with its own integer number.
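As a minimal sketch of how the device numbers can be listed from a shell on the GPU node, the snippet below asks nvidia-smi for the index and name of each device. The variable name `gpu_list` is only illustrative, and the fallback messages are an assumption about how you might want to handle a node without a working driver.

```shell
# Print one "index, name" row per device visible to the driver.
# A dual device such as a K80 is expected to appear as two rows.
if command -v nvidia-smi >/dev/null 2>&1; then
    gpu_list=$(nvidia-smi --query-gpu=index,name --format=csv,noheader 2>&1) \
        || gpu_list="nvidia-smi could not query the driver"
else
    gpu_list="nvidia-smi not found on this node"
fi
printf '%s\n' "$gpu_list"
```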


Setting the identifiers

You need to make certain that the identifiers that you pass to the project are correct. During unfolding, Dynamo will not check that the device numbers are correct!
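Since the identifiers are not checked for you, one option is to check them yourself in the shell before putting them into the project. The helper below is a hypothetical sketch, not part of Dynamo: `check_gpu_ids` is an invented name, and it assumes that valid identifiers run from 0 to one less than the number of devices listed by `nvidia-smi -L`.

```shell
# Hypothetical helper (not a Dynamo command).
# $1: comma-separated identifiers, e.g. "0,1,2,3"
# $2: number of devices on the node
# Returns 0 only if every identifier is an integer in 0..$2-1.
check_gpu_ids() {
    ids=$1
    count=$2
    for id in $(printf '%s' "$ids" | tr ',' ' '); do
        case $id in
            ''|*[!0-9]*) echo "not an integer: $id" >&2; return 1 ;;
        esac
        if [ "$id" -ge "$count" ]; then
            echo "no such device: $id" >&2
            return 1
        fi
    done
    return 0
}

# Count the devices reported by the driver; 0 if nvidia-smi is unavailable.
if command -v nvidia-smi >/dev/null 2>&1; then
    gpu_count=$(nvidia-smi -L 2>/dev/null | grep -c '^GPU ')
else
    gpu_count=0
fi

check_gpu_ids "0,1,2,3" "$gpu_count" && echo "identifiers look valid"
```

The function only compares numbers against a count, so it cannot tell whether a device is busy or usable.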

On the GUI

In the dcp GUI, go to the computing environment GUI. In the GPU panel there is an edit field for the GPU identifiers.

Through the command line

The parameter is called gpu_identifier_set (short form: gpus). You can set it in a project using dvput, for instance:

dvput myProject gpus [0,1,2,3]

Environment variable

On some rare systems, you might be required to manually set the environment variable CUDA_VISIBLE_DEVICES to the device numbers that you already selected inside Dynamo.

export CUDA_VISIBLE_DEVICES="1"

or

export CUDA_VISIBLE_DEVICES="0,2"
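To keep the environment variable in step with the identifiers given to the project, the list can be converted rather than retyped. This is a minimal sketch: the variable `project_gpus` is hypothetical and simply holds the same bracketed list that was passed to dvput.

```shell
# Hypothetical: the same list that was given to dvput, e.g. [0,2]
project_gpus="[0,2]"

# Strip brackets and spaces so that "[0, 2]" becomes "0,2".
ids=$(printf '%s' "$project_gpus" | tr -d '[] ')

export CUDA_VISIBLE_DEVICES="$ids"
echo "CUDA_VISIBLE_DEVICES=$CUDA_VISIBLE_DEVICES"
```

The export only affects the current shell and the processes started from it, so it has to be run in the same session that later launches the computation.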